Table of Contents

- 1 What is an Operating System?

- 2 Functions of Operating Systems

- 3 Process Management

- 4 Memory Management

- 5 File System Management

- 6 I/O System Management

- 7 Communication Management

- 8 Network Management

- 8.1 File Services

- 8.2 Message Services

- 8.3 Security and Network Management Services

- 8.4 Printer Services

- 8.5 Remote Procedure Calls (RPCs)

- 8.6 Remote Processing Services

- 8.7 What is an Operating System?

- 8.8 What are the main functions of an operating system?

- 8.9 What is CPU scheduling?

- 8.10 What is the role of network management?

- 8.11 How is the file system managed by the OS?

- 8.12 What is process management in an OS?

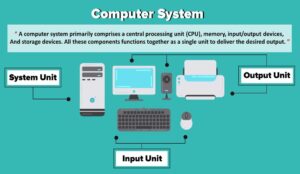

What is an Operating System?

An operating system is the system software that manages and controls the activities of the computer. It makes a computer system user-friendly by making it easier for the user to interact with the machine.


It is the most important program that runs on a computer system, and the execution of all other programs depends on it.

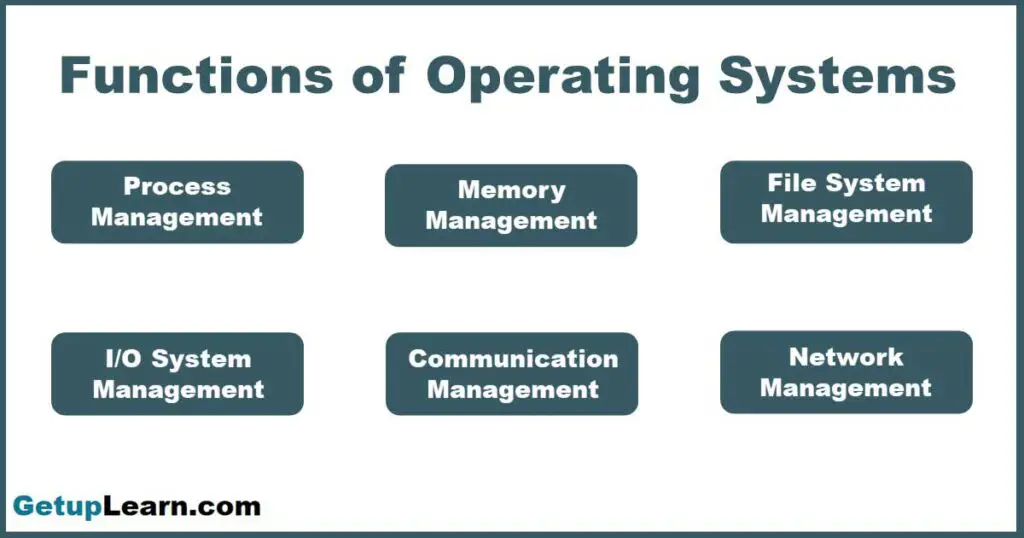

Functions of Operating Systems

This section describes the fundamental technologies behind the main functions of modern operating systems.

The basic functions of operating systems can be classified as:

- Process Management

- Memory Management

- File System Management

- I/O System Management

- Communication Management

- Network Management

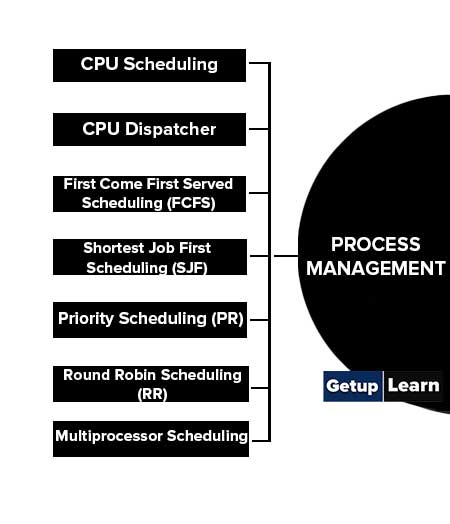

Process Management

A process is a program in execution on a computer, supported by the operating system. A process can be either a system process or a user process: the former executes system code, and the latter runs a user's application.

Processes may be executed sequentially or concurrently depending on the type of operating system.

Threads are an important concept of process management in operating systems. A thread is a basic unit of CPU utilization, and a flow of control within a process, supported by a thread control block (TCB) with a thread ID, a set of registers, a program counter, and a stack.

Conventional operating systems are single-threaded, meaning each process has a single thread of control. Multithreaded systems allow a process to control a number of execution threads.

The benefits of multithreaded operating systems and multithreaded programming are responsiveness, resource sharing, implementation efficiency, and utilization of multiprocessor architectures of modern computers.
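To make resource sharing between threads concrete, the sketch below starts several threads inside one Python process. All of them write into the same `partial` list because threads of a process share its memory, while each thread keeps its own stack and local variables. The name `parallel_sum` and the default worker count are invented for illustration. In CPython the global interpreter lock keeps these threads from executing Python code on several cores at once, so the example shows sharing rather than speedup.

```python
import threading


def parallel_sum(numbers: list[int], workers: int = 4) -> int:
    """Sum a list using several threads of the same process (illustrative only)."""
    partial = [0] * workers  # one shared list, visible to every thread

    def work(slot: int) -> None:
        # Each thread has its own stack and locals, but writes into shared memory.
        # Every thread writes only its own slot, so no lock is needed here.
        partial[slot] = sum(numbers[slot::workers])

    threads = [threading.Thread(target=work, args=(i,)) for i in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread of control to finish
    return sum(partial)


if __name__ == "__main__":
    print(parallel_sum(list(range(101))))  # 5050
```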

Some tasks in process management are given below:

- CPU Scheduling

- CPU Dispatcher

- First Come First Served Scheduling (FCFS)

- Shortest Job First Scheduling (SJF)

- Priority Scheduling (PR)

- Round Robin Scheduling (RR)

- Multiprocessor Scheduling

CPU Scheduling

CPU scheduling is a fundamental operating system function to maximize CPU utilization. Multiprogramming and multithreading techniques keep the CPU busy by running different processes and threads on a time-sharing basis. CPU scheduling is the basis of multiprogramming and multithreaded operating systems.

CPU Scheduler: The CPU scheduler is a kernel function of operating systems that selects which process in the ready queue should be run next based on a predefined scheduling algorithm or strategy.

CPU Dispatcher

The CPU dispatcher is a kernel function of operating systems that switches control of the CPU to the process selected by the scheduler.

The typical CPU scheduling algorithms are described as follows.

First Come First Served Scheduling (FCFS)

This algorithm schedules the first process in the ready queue to the CPU based on the assumption that all processes in the queue have an equal priority. FCFS is the simplest scheduling algorithm for CPU scheduling.

The disadvantage of the FCFS algorithm is that if there are long processes in front of the queue, short processes may have to wait for a very long time.
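A quick calculation shows the effect. The sketch below computes each process's waiting time under FCFS, assuming all processes arrive at time 0 in queue order and ignoring context-switch time; the function name and burst lengths are made up for illustration.

```python
def fcfs_waiting_times(bursts: list[int]) -> list[int]:
    """Waiting time of each process when the CPU serves the ready queue in order.

    Assumes every process arrives at time 0 in the order given.
    """
    waits = []
    clock = 0
    for burst in bursts:
        waits.append(clock)  # a process waits until every earlier one has finished
        clock += burst
    return waits


if __name__ == "__main__":
    long_first = fcfs_waiting_times([24, 3, 3])
    short_first = fcfs_waiting_times([3, 3, 24])
    print(long_first, sum(long_first) / 3)    # [0, 24, 27] 17.0
    print(short_first, sum(short_first) / 3)  # [0, 3, 6] 3.0
```

With the long process at the head of the queue the average wait is 17 time units; placing it last drops the average to 3.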

Shortest Job First Scheduling (SJF)

This algorithm gives priority to the shortest processes, which yields the minimum average waiting time for a given set of processes. However, SJF needs to know the length of each process's next CPU burst in advance, and that length is difficult to predict.
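A minimal non-preemptive SJF sketch, assuming every process arrives at time 0 and that its burst length is known exactly in advance (the assumption the paragraph above flags as hard to meet). The process names and burst lengths are hypothetical.

```python
def sjf_order(bursts: dict[str, int]) -> list[str]:
    """Non-preemptive SJF: run the shortest known CPU burst first.

    Assumes all processes arrive at time 0 and burst lengths are known exactly.
    """
    return sorted(bursts, key=lambda name: bursts[name])


def waiting_times(order: list[str], bursts: dict[str, int]) -> dict[str, int]:
    waits: dict[str, int] = {}
    clock = 0
    for name in order:
        waits[name] = clock  # waits for everything scheduled before it
        clock += bursts[name]
    return waits


if __name__ == "__main__":
    bursts = {"P1": 6, "P2": 8, "P3": 7, "P4": 3}
    order = sjf_order(bursts)
    waits = waiting_times(order, bursts)
    print(order)                             # ['P4', 'P1', 'P3', 'P2']
    print(sum(waits.values()) / len(waits))  # 7.0
```

Serving the same four processes in the order P1, P2, P3, P4 would give an average wait of 10.25, so picking the shortest job first cuts it to 7.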

Priority Scheduling (PR)

This algorithm assigns different priorities to individual processes, and CPU scheduling is carried out by selecting the process with the highest priority. The drawback of the priority algorithm is starvation, a term that denotes the indefinite blocking of low-priority processes under high CPU load.

To deal with starvation, a technique called aging may be adopted: it periodically increases the priority of processes waiting in the ready queue, so that low-priority processes eventually become eligible to run.
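The toy simulation below contrasts plain priority scheduling with aging. It assumes a larger number means a higher priority, a hypothetical aging rate of one priority level per 10 ticks of waiting, and a workload in which a new high-priority job arrives every tick; none of these values come from the text.

```python
from dataclasses import dataclass
from typing import Optional

AGING_INTERVAL = 10  # hypothetical: +1 priority for every 10 ticks spent waiting


@dataclass
class Proc:
    name: str
    priority: int    # assumption: a larger number means a higher priority
    waited: int = 0  # ticks spent in the ready queue so far


def effective_priority(p: Proc) -> int:
    """Base priority raised by aging."""
    return p.priority + p.waited // AGING_INTERVAL


def static_priority(p: Proc) -> int:
    return p.priority


def first_run_of_low_job(ticks: int, aging: bool) -> Optional[int]:
    """One priority-1 job waits from tick 0; a fresh priority-5 job needing
    one tick arrives every tick. Return the tick when the low job is first
    dispatched, or None if it starves for the whole run."""
    low = Proc("low", priority=1)
    key = effective_priority if aging else static_priority
    for t in range(ticks):
        fresh = Proc(f"high{t}", priority=5)
        chosen = max([fresh, low], key=key)  # ties go to the fresh job
        if chosen is low:
            return t
        low.waited += 1  # the low job spent another tick in the ready queue
    return None


if __name__ == "__main__":
    print(first_run_of_low_job(100, aging=False))  # None: starvation
    print(first_run_of_low_job(100, aging=True))   # 50
```

Without aging the low-priority job never runs in 100 ticks; with aging its effective priority overtakes the newcomers after 50 ticks of waiting.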

Round Robin Scheduling (RR)

This algorithm allocates the CPU to the first process in the FIFO ready queue for at most a predefined time slice (quantum). If the process has not completed when its time slice expires, it is preempted and put back at the tail of the ready queue.
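A minimal round-robin sketch, assuming all processes arrive at time 0 in the given order and that context switches cost nothing. The `quantum` parameter is the time slice; the process names and burst lengths are illustrative.

```python
from collections import deque


def round_robin(bursts: dict[str, int], quantum: int) -> dict[str, int]:
    """Return each process's completion time under round-robin scheduling."""
    ready = deque(bursts.items())  # FIFO ready queue of (name, remaining time)
    clock = 0
    finished: dict[str, int] = {}
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run for at most one time slice
        clock += run
        remaining -= run
        if remaining:
            ready.append((name, remaining))  # preempted: back to the tail
        else:
            finished[name] = clock
    return finished


if __name__ == "__main__":
    bursts = {"P1": 24, "P2": 3, "P3": 3}
    done = round_robin(bursts, quantum=4)
    print(done)  # {'P2': 7, 'P3': 10, 'P1': 30}
    print({p: done[p] - bursts[p] for p in bursts})  # {'P1': 6, 'P2': 4, 'P3': 7}
```

Here the waiting times are 6, 4 and 7. Serving the same processes FCFS in the order P1, P2, P3 gives waiting times of 0, 24 and 27, so under round robin the short processes no longer wait behind the long one.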

Multiprocessor Scheduling

This algorithm schedules each processor individually in a multiprocessor operating system on the basis of a common queue of processes. In a multiprocessor operating system, processes that need to use a specific device have to be switched to the right processor that is physically connected to the device.
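A rough sketch of scheduling several processors from one common ready queue: whenever a CPU becomes idle, it takes the next process from the shared FIFO queue. The function name, burst lengths, and FIFO order are assumptions for illustration, and device affinity (the second point above) is not modeled.

```python
import heapq
from collections import deque


def schedule_common_queue(bursts: list[int], cpus: int) -> list[tuple[int, int, int]]:
    """Dispatch processes from one shared FIFO ready queue to several CPUs.

    Returns (process index, CPU id, start time) for each process.
    Assumes all processes are ready at time 0 and ignores device affinity.
    """
    ready = deque(enumerate(bursts))             # the common ready queue
    free_at = [(0, cpu) for cpu in range(cpus)]  # (time the CPU becomes idle, CPU id)
    heapq.heapify(free_at)
    plan = []
    while ready:
        start, cpu = heapq.heappop(free_at)  # the CPU that becomes idle first
        pid, burst = ready.popleft()         # takes the next process in the queue
        plan.append((pid, cpu, start))
        heapq.heappush(free_at, (start + burst, cpu))
    return plan


if __name__ == "__main__":
    print(schedule_common_queue([5, 3, 2, 4], cpus=2))
    # [(0, 0, 0), (1, 1, 0), (2, 1, 3), (3, 0, 5)]
```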

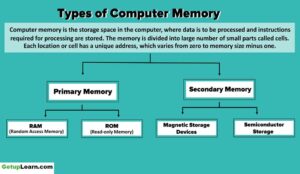

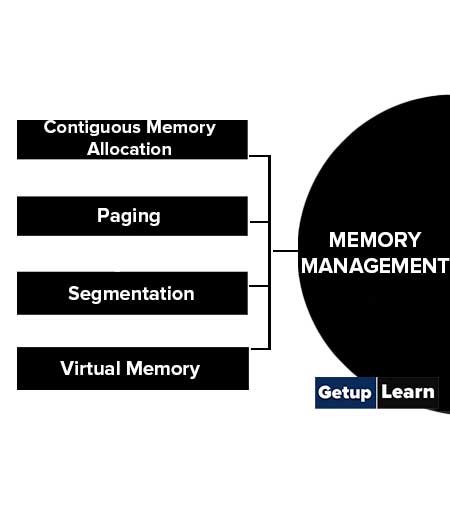

Memory Management

Memory management is one of the key functions of operating systems because memory serves both as the working space of running programs and as storage for data and files.

Common memory management technologies of operating systems are:

Contiguous Memory Allocation

This method was used primarily in batch systems, where memory is divided into a number of fixed-size partitions. Contiguous allocation is carried out by searching a set of holes (free partitions) for one that suits the memory requirement of a process.

A number of algorithms or strategies were developed for contiguous memory allocation such as the first-fit, best-fit, and worst-fit algorithms.
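The three strategies differ only in which hole they pick. The sketch below returns the index of the chosen hole for each strategy; the hole sizes and the request are made-up numbers, and splitting the chosen hole afterwards is left out.

```python
from typing import Optional


def first_fit(holes: list[int], request: int) -> Optional[int]:
    """Index of the first hole large enough for the request."""
    for i, size in enumerate(holes):
        if size >= request:
            return i
    return None


def best_fit(holes: list[int], request: int) -> Optional[int]:
    """Index of the smallest hole that is still large enough."""
    fits = [(size, i) for i, size in enumerate(holes) if size >= request]
    return min(fits)[1] if fits else None


def worst_fit(holes: list[int], request: int) -> Optional[int]:
    """Index of the largest hole, which leaves the biggest leftover piece."""
    fits = [(size, i) for i, size in enumerate(holes) if size >= request]
    return max(fits)[1] if fits else None


if __name__ == "__main__":
    holes = [100, 500, 200, 300, 600]  # free partition sizes in KB (made up)
    print(first_fit(holes, 212), best_fit(holes, 212), worst_fit(holes, 212))  # 1 3 4
```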

Paging

Paging is a dynamic memory allocation method that divides the logical memory into equal blocks known as pages corresponding to physical memory frames. In a paging system, each logical address contains a page number and a page offset.

The physical address is generated through a page table, which provides the base address of the physical frame holding each page. Paging is widely used in modern operating systems because it avoids the external fragmentation problem found in early contiguous memory allocation techniques.
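A minimal sketch of the translation step, assuming a 4096-byte page size and a small hypothetical page table. The logical address is split into a page number and an offset, the page number is looked up in the page table, and the offset is added to the base address of the frame.

```python
PAGE_SIZE = 4096  # assumed page (and frame) size in bytes

# Hypothetical page table for one process: page number -> physical frame number
page_table = {0: 5, 1: 2, 2: 7}


def translate_paged(logical_address: int) -> int:
    """Translate a logical address to a physical address through the page table."""
    page, offset = divmod(logical_address, PAGE_SIZE)  # page number and page offset
    if page not in page_table:
        raise LookupError(f"page {page} is not mapped")
    frame = page_table[page]
    return frame * PAGE_SIZE + offset  # frame base address plus the unchanged offset


if __name__ == "__main__":
    print(translate_paged(8200))  # page 2, offset 8 -> 7 * 4096 + 8 = 28680
```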

Segmentation

This is a memory-management technique that uses a set of segments (logical address spaces) to represent the user’s logical view of memory independent of the physical allocation of system memory. Segments can be accessed by providing their names (numbers) and offsets.
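A segment can be sketched as a (base, limit) pair in a segment table. The table below is hypothetical; the translation checks that the offset lies inside the segment before adding it to the segment's base address.

```python
# Hypothetical segment table: segment number -> (base, limit)
segment_table = {
    0: (1400, 1000),
    1: (6300, 400),
    2: (4300, 400),
    3: (3200, 1100),
    4: (4700, 1000),
}


class SegmentationFault(Exception):
    """Raised when an offset falls outside its segment."""


def translate_segmented(segment: int, offset: int) -> int:
    base, limit = segment_table[segment]
    if not 0 <= offset < limit:  # the offset must lie inside the segment
        raise SegmentationFault(f"offset {offset} outside segment {segment}")
    return base + offset


if __name__ == "__main__":
    print(translate_segmented(2, 53))   # 4353
    print(translate_segmented(3, 852))  # 4052
```

An offset past the limit, such as `translate_segmented(0, 1222)`, raises `SegmentationFault` instead of touching another segment's memory.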

Virtual Memory

When the memory requirement of a process is larger than physical memory, an advanced technique known as virtual memory is needed. It enables the execution of processes that are not completely in memory.

The main approach to implementing virtual memory is to separate the logical view of system memory from its physical allocation and limitations. Various technologies have been developed to support virtual memory such as the demand paging and demand segmentation algorithms.
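Demand paging loads a page only when it is first referenced, and when every frame is full some resident page must be evicted. The text does not name a replacement policy, so the sketch below assumes simple FIFO replacement (evict the page that was loaded earliest) and counts page faults for a reference string; the frame count and the string are made up for illustration.

```python
from collections import deque


def fifo_page_faults(references: list[int], frames: int) -> int:
    """Count page faults for demand paging with FIFO page replacement."""
    resident: set[int] = set()
    loaded: deque = deque()  # pages in the order they were loaded
    faults = 0
    for page in references:
        if page in resident:
            continue  # hit: the page is already in a frame
        faults += 1   # fault: load the page on demand
        if len(resident) == frames:
            victim = loaded.popleft()  # evict the page that was loaded earliest
            resident.remove(victim)
        resident.add(page)
        loaded.append(page)
    return faults


if __name__ == "__main__":
    refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
    print(fifo_page_faults(refs, frames=3))  # 15
```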

In memory-sharing systems, the sender and receiver use a common area of memory to place the data that is to be exchanged. To guarantee appropriate concurrent manipulation of these shared areas, the operating system has to provide synchronization services for mutual exclusion.

A common synchronization primitive is the semaphore, which provides mutual exclusion for two tasks using a common area of memory. In a shared memory system the virtual memory subsystem must also collaborate to provide the shared areas of work.
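A minimal sketch of a semaphore guarding a shared area, using Python's `threading.Semaphore`, with threads standing in for the tasks and a dict standing in for the common memory area. The function name and the counts are arbitrary.

```python
import threading


def run_with_semaphore(tasks: int = 2, increments: int = 100_000) -> int:
    shared = {"counter": 0}         # stands in for the common memory area
    mutex = threading.Semaphore(1)  # binary semaphore: one task at a time

    def task() -> None:
        for _ in range(increments):
            with mutex:                 # acquire (wait/P) on entry, release (signal/V) on exit
                shared["counter"] += 1  # critical section on the shared area

    workers = [threading.Thread(target=task) for _ in range(tasks)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return shared["counter"]


if __name__ == "__main__":
    print(run_with_semaphore())  # 200000
```

Because only one task at a time may be inside the `with mutex:` block, no increment is lost.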

File System Management

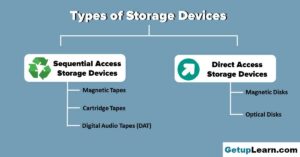

The file system is the most used function of operating systems for non-programming users. A file is a logical storage unit of data or code, separated from its physical implementation and location. Files can hold text, source code, executable code, object code, word-processor documents, or system library code.

A file's attributes include its name, type, location (directory path), size, date/time, user ID, and access control information. Logical file structures can be classified as sequential and random-access files.

Sequential files organize information as a list of ordered records, while random-access files consist of fixed-length logical records that can be accessed directly by their block number. Typical file operations are reading, writing, and appending. Common file management operations are creating, deleting, opening, closing, copying, and renaming.
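The difference between the two structures shows up in how a record is found. The sketch below stores hypothetical 8-byte records in an in-memory file; sequential access reads from the start, while direct access computes the byte offset of record n and seeks to it. Here the record number plays the role of the block number.

```python
import io
import struct
from typing import BinaryIO

# Hypothetical fixed-length record: (id, value) as two little-endian 32-bit integers
RECORD = struct.Struct("<ii")


def write_records(f: BinaryIO, records: list[tuple[int, int]]) -> None:
    for rec in records:
        f.write(RECORD.pack(*rec))


def read_sequential(f: BinaryIO) -> list[tuple[int, int]]:
    """Sequential access: start at the beginning and read records in order."""
    f.seek(0)
    out = []
    while chunk := f.read(RECORD.size):
        out.append(RECORD.unpack(chunk))
    return out


def read_direct(f: BinaryIO, n: int) -> tuple[int, int]:
    """Direct access: compute where record n starts and jump straight there."""
    f.seek(n * RECORD.size)
    return RECORD.unpack(f.read(RECORD.size))


if __name__ == "__main__":
    f = io.BytesIO()  # an in-memory stand-in for a file on disk
    write_records(f, [(1, 10), (2, 20), (3, 30)])
    print(read_sequential(f))  # [(1, 10), (2, 20), (3, 30)]
    print(read_direct(f, 2))   # (3, 30)
```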

The file system of an operating system consists of a set of files and a directory structure that organizes all files and provides detailed information about them. The major function of the file management system is to map logical files onto physical storage devices such as disks or tapes. Most file systems organize files by a tree-structured directory.

A file in the file system is identified by its name and by the detailed attributes provided by the file directory. A hash table is a frequently used method for directory management. Although it is fast and efficient, a backup is required to recover the hash table from unexpected damage.

A physical file system can be implemented by contiguous, linked, and indexed allocation. Contiguous allocation can suffer from external fragmentation. Direct access is inefficient with linked allocation. The indexed allocation may require substantial overheads for its index block.
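The cost difference between linked and indexed allocation can be sketched with a hypothetical file stored in disk blocks 9, 16, 1, 10 and 25. Reaching logical block k under linked allocation means following k pointers from the first block, whereas indexed allocation needs a single lookup in the index block.

```python
from typing import Optional

# Hypothetical file occupying disk blocks 9 -> 16 -> 1 -> 10 -> 25
START_BLOCK = 9
next_block: dict[int, Optional[int]] = {9: 16, 16: 1, 1: 10, 10: 25, 25: None}  # linked allocation
index_block = [9, 16, 1, 10, 25]  # indexed allocation: one entry per logical block


def linked_lookup(k: int) -> tuple[int, int]:
    """Return (disk block of logical block k, number of pointer reads needed)."""
    block, reads = START_BLOCK, 0
    for _ in range(k):
        nxt = next_block[block]  # each block's pointer must be read in turn
        if nxt is None:
            raise IndexError(f"file has no logical block {k}")
        block, reads = nxt, reads + 1
    return block, reads


def indexed_lookup(k: int) -> int:
    """One lookup in the index block, no chain to follow."""
    return index_block[k]
```

`linked_lookup(4)` returns `(25, 4)`, four pointer reads to reach the fifth block, while `indexed_lookup(4)` returns `25` directly.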

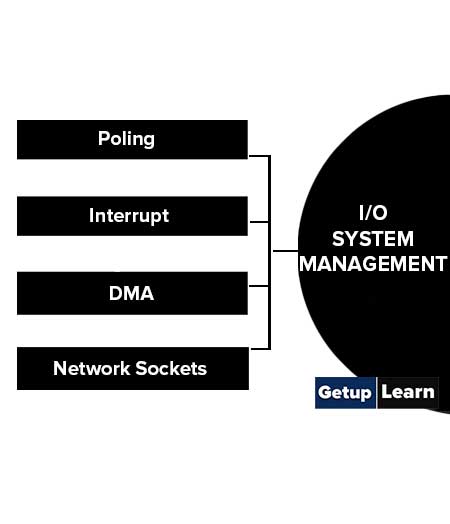

I/O System Management

I/O devices of a computer system encompass a variety of generic and special hardware and interfaces, and the operating system has to deal with all of them. I/O devices are connected to the computer through buses with specific ports or I/O addresses.

Usually, between an I/O device and the bus, there is a device controller and an associated device driver program. The I/O management system of an operating system is designed to enable users to use and access system I/O devices seamlessly, harmoniously, and efficiently.

I/O management techniques of operating systems can be described as follows:

Polling

Polling is a simple I/O control technique by which the operating system periodically checks the status of the device until it is ready before any I/O operation is carried out.
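A minimal polling loop, using a hypothetical `FakeDevice` class in place of a real status register; it reports ready only after a given number of checks. The CPU does no useful work while it loops, which is the main cost of polling.

```python
class FakeDevice:
    """Hypothetical device whose status register reports 'ready' after a few checks."""

    def __init__(self, ready_after: int) -> None:
        self._checks = 0
        self._ready_after = ready_after

    def is_ready(self) -> bool:
        self._checks += 1  # each call models one read of the status register
        return self._checks >= self._ready_after

    def read(self) -> bytes:
        return b"data"


def polled_read(device: FakeDevice) -> tuple[bytes, int]:
    """Busy-wait on the device status, then perform the I/O. Returns (data, polls)."""
    polls = 0
    while True:
        polls += 1
        if device.is_ready():
            break  # the device is ready, so the I/O operation can proceed
    return device.read(), polls


if __name__ == "__main__":
    print(polled_read(FakeDevice(ready_after=3)))  # (b'data', 3)
```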

Interrupt

An interrupt is a more advanced I/O control technique that lets an I/O device or its controller notify the CPU (or the system interrupt controller) whenever an I/O request occurs or an awaited event is ready.

When an interrupt is detected, the operating system saves the current execution environment, dispatches a corresponding interrupt handler to process the required interrupt, and then returns to the interrupted program.

Interrupts can be assigned different priority levels, depending on the processor architecture, so that complicated and concurrent interrupt requests can be handled.
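The save, dispatch and restore sequence can be sketched in software. The interrupt vector, IRQ numbers, priorities and handler addresses below are all hypothetical, the "CPU" is just a dict of register values, and pending requests are simply serviced in priority order rather than nesting one handler inside another as real hardware may do.

```python
import heapq
from typing import Callable

handled: list[str] = []  # log of serviced interrupts, for illustration only


def make_handler(name: str, entry: int) -> Callable[[dict], None]:
    def handler(cpu: dict) -> None:
        cpu["pc"] = entry  # the handler's own code now occupies the CPU
        handled.append(name)
    return handler


# Hypothetical interrupt vector: IRQ -> (priority, handler); 0 is the most urgent
VECTOR = {
    0: (0, make_handler("timer", 0x100)),
    14: (1, make_handler("disk", 0x200)),
    1: (2, make_handler("keyboard", 0x300)),
}


def service_interrupts(pending: list[int], cpu: dict) -> None:
    saved = dict(cpu)  # save the interrupted execution context
    queue = [(VECTOR[irq][0], irq) for irq in pending]
    heapq.heapify(queue)  # most urgent request first
    while queue:
        _priority, irq = heapq.heappop(queue)
        VECTOR[irq][1](cpu)  # dispatch the matching handler
    cpu.clear()
    cpu.update(saved)  # restore the context and resume the program


if __name__ == "__main__":
    cpu = {"pc": 0x4000, "acc": 7}
    service_interrupts([1, 14, 0], cpu)
    print(handled)  # ['timer', 'disk', 'keyboard']
    print(cpu)      # {'pc': 16384, 'acc': 7}
```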

DMA

Direct memory access (DMA) is used to transfer large blocks of data between main memory and I/O devices, such as disks or communication ports, without the CPU copying each byte. The operating system sets up a DMA controller, which carries out the transfer between the I/O device and memory and notifies the CPU, typically with an interrupt, when the transfer is complete.

Network Sockets

Most operating systems use a socket interface to control network communications. When a network connection is requested, the operating system creates a local socket and asks the target machine to establish a connection to a remote socket. The pair of computers can then communicate using a given communication protocol.
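A minimal sketch with Python's standard `socket` module: a listening socket on the loopback address stands in for the target machine, and the client's connection completes the socket pair. The echo behavior and the function names are illustrative, and the protocol in use here is TCP.

```python
import socket
import threading


def echo_server(server: socket.socket) -> None:
    conn, _addr = server.accept()  # wait for the remote side to connect
    with conn:
        while data := conn.recv(1024):  # echo until the client stops sending
            conn.sendall(data)


def echo_roundtrip(message: bytes) -> bytes:
    # Listening socket on the loopback interface; port 0 lets the OS pick a free port.
    server = socket.create_server(("127.0.0.1", 0))
    port = server.getsockname()[1]
    thread = threading.Thread(target=echo_server, args=(server,))
    thread.start()
    with socket.create_connection(("127.0.0.1", port)) as client:  # the local socket
        client.sendall(message)
        client.shutdown(socket.SHUT_WR)  # tell the peer we are done sending
        chunks = []
        while chunk := client.recv(1024):
            chunks.append(chunk)
    thread.join()
    server.close()
    return b"".join(chunks)


if __name__ == "__main__":
    print(echo_roundtrip(b"hello"))  # b'hello'
```

Passing port 0 lets the operating system choose a free port, which `getsockname()` then reports.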

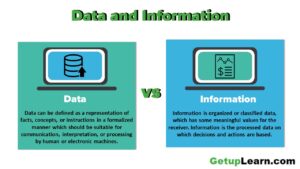

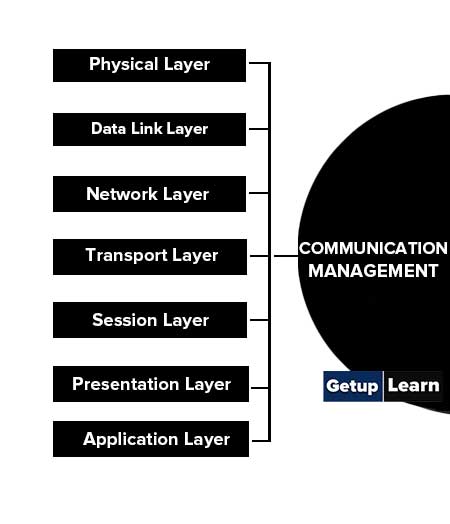

Communication Management

A fundamental characteristic that may vary from system to system is the way tasks communicate. There are two ways to do this: by sending messages between tasks, or by sharing memory that the communicating tasks can both access.

Operating systems can support either, and both can coexist in one system. In message-passing systems, the sender task builds a message in an area that it owns and then asks the operating system to send the message to the recipient.

There must be a location mechanism in the system so that the sender can identify the receiver. The operating system is then put in charge of delivering the message to the recipient.
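A minimal message-passing sketch using Python threads as tasks and a `queue.Queue` standing in for the operating system's delivery mechanism. The queue object also acts as the location mechanism here, since the sender puts messages into the receiver's queue. The `None` sentinel that ends the exchange is a convention chosen for this example.

```python
import queue
import threading


def exchange(messages: list[str]) -> list[str]:
    """Sender and receiver tasks communicate only through a message queue."""
    mailbox: queue.Queue = queue.Queue()  # stands in for the OS delivery mechanism
    received: list[str] = []

    def sender() -> None:
        for m in messages:
            mailbox.put(m)  # "send": hand the message to the system
        mailbox.put(None)   # sentinel meaning "no more messages"

    def receiver() -> None:
        while (m := mailbox.get()) is not None:  # "receive": blocks until delivery
            received.append(m)

    tasks = [threading.Thread(target=receiver), threading.Thread(target=sender)]
    for t in tasks:
        t.start()
    for t in tasks:
        t.join()
    return received


if __name__ == "__main__":
    print(exchange(["hello", "world"]))  # ['hello', 'world']
```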

From the bottom up, the seven layers of the OSI reference model used for communication management are:

- Physical Layer

- Data Link Layer

- Network Layer

- Transport Layer

- Session Layer

- Presentation Layer

- Application Layer

Physical Layer

The physical layer provides a real communication channel for transmitting bitstream data. The mechanical, electrical, and procedural interfaces for the different physical transmission media are specified at this layer.

Data Link Layer

The data link layer builds data frames at the sender end, acknowledges frames at the receiver end, and retransmits a frame when it is not transmitted successfully. In this way, the data link layer turns the raw transmission line of the physical layer into a line that appears free of transmission errors to the network layer.
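Error detection is what lets the receiver decide whether to acknowledge a frame. The sketch below appends a CRC-32 (computed with Python's `zlib.crc32`) to each frame; the 4-byte trailer layout is an assumption, and the acknowledgment and retransmission logic is not shown.

```python
import struct
import zlib


def make_frame(payload: bytes) -> bytes:
    """Sender side: append a CRC-32 of the payload as a 4-byte trailer."""
    return payload + struct.pack("!I", zlib.crc32(payload))


def frame_ok(frame: bytes) -> bool:
    """Receiver side: recompute the CRC; a mismatch means the frame was damaged."""
    payload, trailer = frame[:-4], frame[-4:]
    return struct.unpack("!I", trailer)[0] == zlib.crc32(payload)


if __name__ == "__main__":
    frame = make_frame(b"hello")
    damaged = bytes([frame[0] ^ 0x01]) + frame[1:]  # flip one bit "on the wire"
    print(frame_ok(frame), frame_ok(damaged))       # True False
```

CRC-32 detects every single-bit error, so the damaged frame is rejected; in a real protocol the sender would retransmit it when no acknowledgment arrives.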

Network Layer

The network layer controls the operation of a subnet by determining how packets are routed from source to destination. It also provides congestion control, accounting of the information flow, and packet size conversion between different networks.
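The text does not say how routes are chosen. One common approach, assumed here, is to compute shortest paths over link costs with Dijkstra's algorithm and keep only the first hop toward each destination, which is what a routing table needs. The five-node topology and its link costs are invented.

```python
import heapq

# Hypothetical topology: node -> {neighbor: link cost}
links = {
    "A": {"B": 1, "C": 4, "E": 1},
    "B": {"A": 1, "C": 2},
    "C": {"A": 4, "B": 2, "D": 2},
    "D": {"C": 2, "E": 1},
    "E": {"A": 1, "D": 1},
}


def next_hops(graph: dict[str, dict[str, int]], source: str) -> dict[str, str]:
    """Dijkstra's shortest paths from source, reported as a routing table:
    destination -> neighbor of source to forward the packet to."""
    dist = {source: 0}
    first_hop: dict[str, str] = {}
    heap = [(0, source, "")]  # (distance, node, first hop used to reach it)
    done = set()
    while heap:
        d, node, hop = heapq.heappop(heap)
        if node in done:
            continue  # stale entry: a shorter path was already settled
        done.add(node)
        if node != source:
            first_hop[node] = hop
        for nbr, cost in graph[node].items():
            nd = d + cost
            if nbr not in done and nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                # Neighbors of the source are their own first hop.
                heapq.heappush(heap, (nd, nbr, hop or nbr))
    return first_hop


if __name__ == "__main__":
    print(next_hops(links, "A"))  # {'B': 'B', 'E': 'E', 'D': 'E', 'C': 'B'}
```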

Transport Layer

The transport layer accepts data from the session layer, splits it into smaller units suited to the network layer, and provides an interface between the session layer and the different implementations of the lower-layer protocols and hardware.
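Splitting data into numbered units lets the receiving side put them back in order. The sketch below uses a hypothetical `max_size` limit and sequence numbers starting at 0; real transport protocols also add headers, acknowledgments and retransmission, which are omitted.

```python
def segment(data: bytes, max_size: int) -> list[tuple[int, bytes]]:
    """Split data into numbered units no larger than max_size bytes."""
    return [
        (seq, data[start:start + max_size])
        for seq, start in enumerate(range(0, len(data), max_size))
    ]


def reassemble(units) -> bytes:
    """Put units back in sequence order, even if they arrived out of order."""
    return b"".join(chunk for _seq, chunk in sorted(units))


if __name__ == "__main__":
    units = segment(b"hello world", 4)
    print(units)                        # [(0, b'hell'), (1, b'o wo'), (2, b'rld')]
    print(reassemble(reversed(units)))  # b'hello world'
```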

Session Layer

The session layer provides session establishment, synchronization, and data flow control between different machines, using the services of the transport layer.

Presentation Layer

The presentation layer converts data from a vendor-dependent format into an abstract, machine-independent data structure at the sender end, and back again at the receiver end. It also enables data to be compressed or encoded before transmission on the lower layers.
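One small instance of converting data to a machine-independent form is byte order: hosts store integers in different orders, so the sender encodes them in an agreed big-endian "network byte order" and the receiver decodes them. The sketch uses Python's `struct` module, whose `!` prefix selects network byte order; the function names are illustrative.

```python
import struct


def encode_u32(value: int) -> bytes:
    """Sender: host integer -> 4 bytes in big-endian network byte order."""
    return struct.pack("!I", value)


def decode_u32(data: bytes) -> int:
    """Receiver: 4 network-order bytes -> integer in the receiver's own format."""
    return struct.unpack("!I", data)[0]


if __name__ == "__main__":
    wire = encode_u32(0x12345678)
    print(wire.hex())                      # 12345678 on every host, whatever its native order
    print(decode_u32(wire) == 0x12345678)  # True
```

Using the `=` prefix instead (`struct.pack("=I", ...)`) would follow the host's native byte order, so the bytes on the wire would differ between machines.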

Application Layer

The application layer adapts a variety of terminals to a unified network virtual terminal interface and supports transferring files between different file systems.

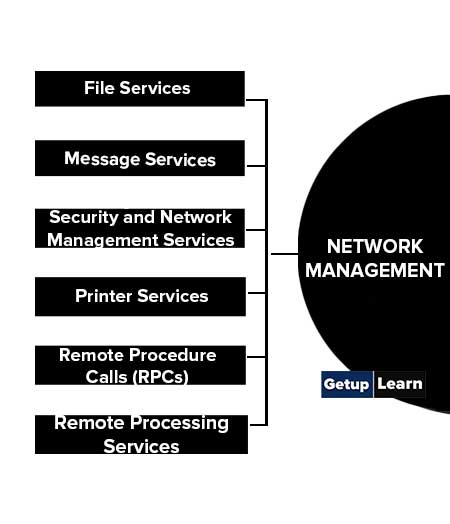

Network Management

A network operating system implements the protocols required for network communication and provides a variety of additional services to users and application programs. Network operating systems may support several different protocol stacks, e.g., a TCP/IP stack and an IPX/SPX stack.

A modern network operating system provides a socket facility that lets users plug in utilities providing additional services.

Common services that a modern network operating system can provide include:

- File Services

- Message Services

- Security and Network Management Services

- Printer Services

- Remote Procedure Calls (RPCs)

- Remote Processing Services

File Services

File services transfer programs and data files from one computer on the network to another.

Message Services

Message services allow users and applications to pass messages from one computer to another on the network. The most familiar applications of message services are email and intercomputer talk facilities.

Security and Network Management Services

These services provide security across the network and allow users to manage and control the network.

Printer Services

Printer services enable sharing of expensive printer resources in a network. Print requests from applications are redirected by the operating system to a network workstation, which manages the requested printer.

Remote Procedure Calls (RPCs)

RPCs provide application program interface services to allow a program to access local or remote network operating system functions.
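A minimal RPC sketch with Python's standard `xmlrpc` modules: a server registers a function, and the client calls it through a proxy as if it were local. The function `add`, the loopback address, and the use of XML-RPC (rather than any particular network operating system's RPC mechanism) are assumptions for illustration.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


def add(a: int, b: int) -> int:
    """The procedure that runs on the 'remote' side."""
    return a + b


def call_remote_add(a: int, b: int) -> int:
    # Server side: expose add() over XML-RPC on a free loopback port.
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(add, "add")
    port = server.server_address[1]
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        # Client side: the proxy turns a method call into a network request.
        with ServerProxy(f"http://127.0.0.1:{port}/") as proxy:
            return proxy.add(a, b)
    finally:
        server.shutdown()      # stop serve_forever()
        server.server_close()  # release the listening socket
        thread.join()


if __name__ == "__main__":
    print(call_remote_add(2, 3))  # 5
```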

Remote Processing Services

Remote processing services allow users or applications to remotely log in to another system on the network and use its facilities for program execution. The most familiar service of this type is Telnet, which is included in the TCP/IP protocol suite [Comer, 1996/2000] and many other modern network operating systems.

What is an Operating System?

An operating system is the system software that manages and controls the activities of the computer. It makes a computer system user-friendly by making it easier for the user to interact with the machine.

What are the main functions of an operating system?

The basic functions of operating systems can be classified as:

1. Process Management, 2. Memory Management, 3. File System Management, 4. I/O System Management, 5. Communication Management, 6. Network Management.

What is CPU scheduling?

CPU scheduling is a fundamental operating system function to maximize CPU utilization. Multiprogramming and multithreading techniques keep the CPU busy by running different processes and threads on a time-sharing basis. CPU scheduling is the basis of multiprogramming and multithreaded operating systems.

What is the role of network management?

Common services that a modern network operating system can provide include:

1. File Services, 2. Message Services, 3. Security and Network Management Services, 4. Printer Services, 5. Remote Procedure Calls (RPCs), 6. Remote Processing Services.

How is the file system managed by the OS?

The file system is the most used function of operating systems for non-programming users. A file is a logical storage unit of data or code separated from its physical implementation and location. Types of files can be text, source code, executable code, object code, word processor formatted, or system library code.

What is process management in an OS?

Some tasks in process management are given below:

1. CPU Scheduling, 2. CPU Dispatcher, 3. First Come First Served Scheduling (FCFS), 4. Shortest Job First Scheduling (SJF), 5. Priority Scheduling (PR), 6. Round Robin Scheduling (RR), 7. Multiprocessor Scheduling.